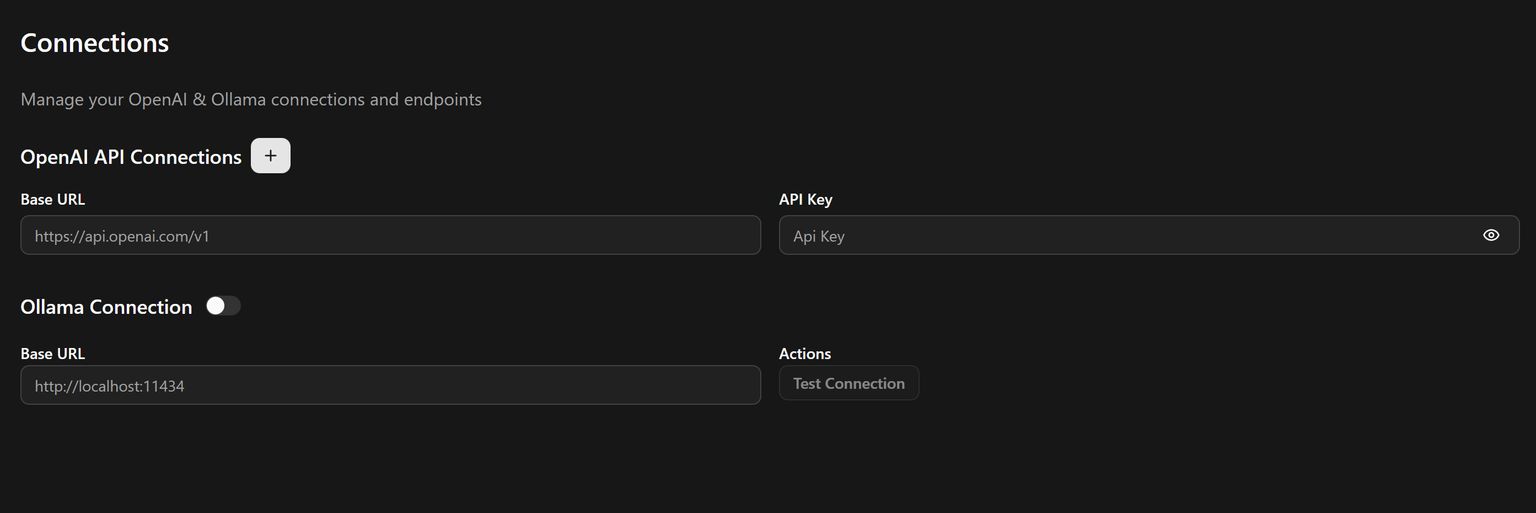

Connections

Connect to OpenAI, OpenRouter, and Ollama. Models from each connected provider are synced automatically. You can also use GGUF models from Hugging Face.

OpenAI Compatible APIs

Use this section to connect to OpenAI-compatible providers. Common options include:

- OpenAI: Create an API key in your OpenAI account and paste it here. See OpenAI.

- OpenRouter: Use your OpenRouter key to access multiple upstream models through a single interface. See OpenRouter.

Configure the provider, add your API key, and select your preferred default model.
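To check a key outside the app, you can query the provider's model list yourself. The sketch below is a minimal example and does not show how the app itself syncs models. It assumes the publicly documented base URLs for OpenAI and OpenRouter and the OpenAI-style `GET /models` response shape. The function names are hypothetical.

```python
import json
import urllib.request

# Publicly documented base URLs (assumption: the app may use different defaults).
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "openrouter": "https://openrouter.ai/api/v1",
}


def build_models_request(provider: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the provider's model list endpoint."""
    url = BASE_URLS[provider].rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def parse_model_ids(body: str) -> list[str]:
    """Extract sorted model ids from an OpenAI-style {"data": [{"id": ...}]} payload."""
    return sorted(item["id"] for item in json.loads(body).get("data", []))


def list_models(provider: str, api_key: str, timeout: float = 10.0) -> list[str]:
    """Fetch and parse the model list. This performs a real network call."""
    request = build_models_request(provider, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

Calling `list_models("openrouter", "<your key>")` should return the model ids that the key can see. An HTTP 401 error usually means the key is wrong or has been revoked.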

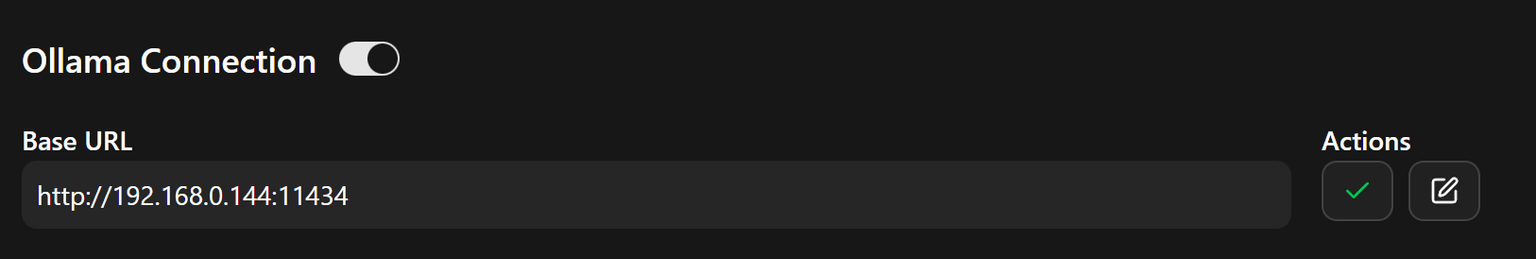

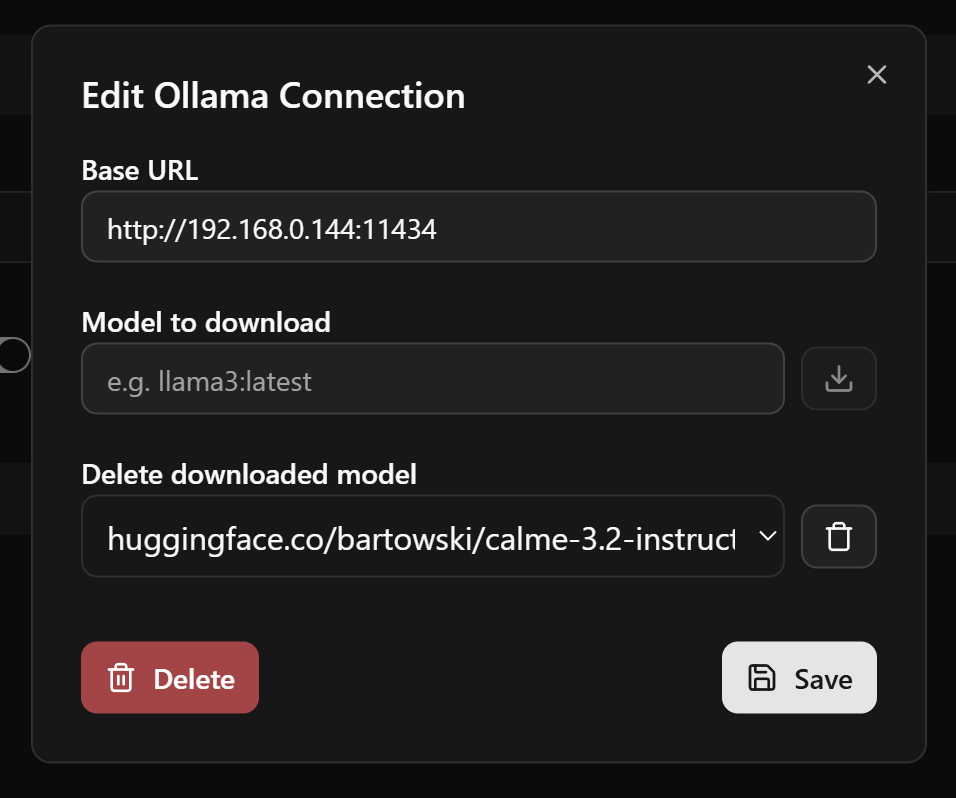

Ollama

Connect to a local Ollama instance. If your instance runs at an address other than the default, enter its base URL, then click Test connection to validate and save the configuration.
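If Test connection fails, it can help to probe the instance directly. The following sketch is an assumption about what a reasonable check looks like, not a description of what the button does internally. It calls Ollama's `GET /api/tags` endpoint, which lists locally installed models, and assumes Ollama's standard address `http://localhost:11434`. `check_connection` and `http_get` are hypothetical helper names.

```python
import json
import urllib.error
import urllib.request

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # Ollama's standard local address


def http_get(url: str, timeout: float = 5.0) -> str:
    """Perform a plain GET and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def check_connection(base_url: str = DEFAULT_OLLAMA_URL, fetch=http_get):
    """Return (True, model_names) if /api/tags answers, else (False, error_text)."""
    url = base_url.rstrip("/") + "/api/tags"
    try:
        payload = json.loads(fetch(url))
    except (urllib.error.URLError, OSError, ValueError) as exc:
        # Covers refused connections, timeouts, and non-JSON responses.
        return False, f"could not reach {url}: {exc}"
    return True, [model["name"] for model in payload.get("models", [])]
```

The `fetch` parameter exists only so the check can be exercised without a running server. In normal use, `check_connection()` with no arguments is enough.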

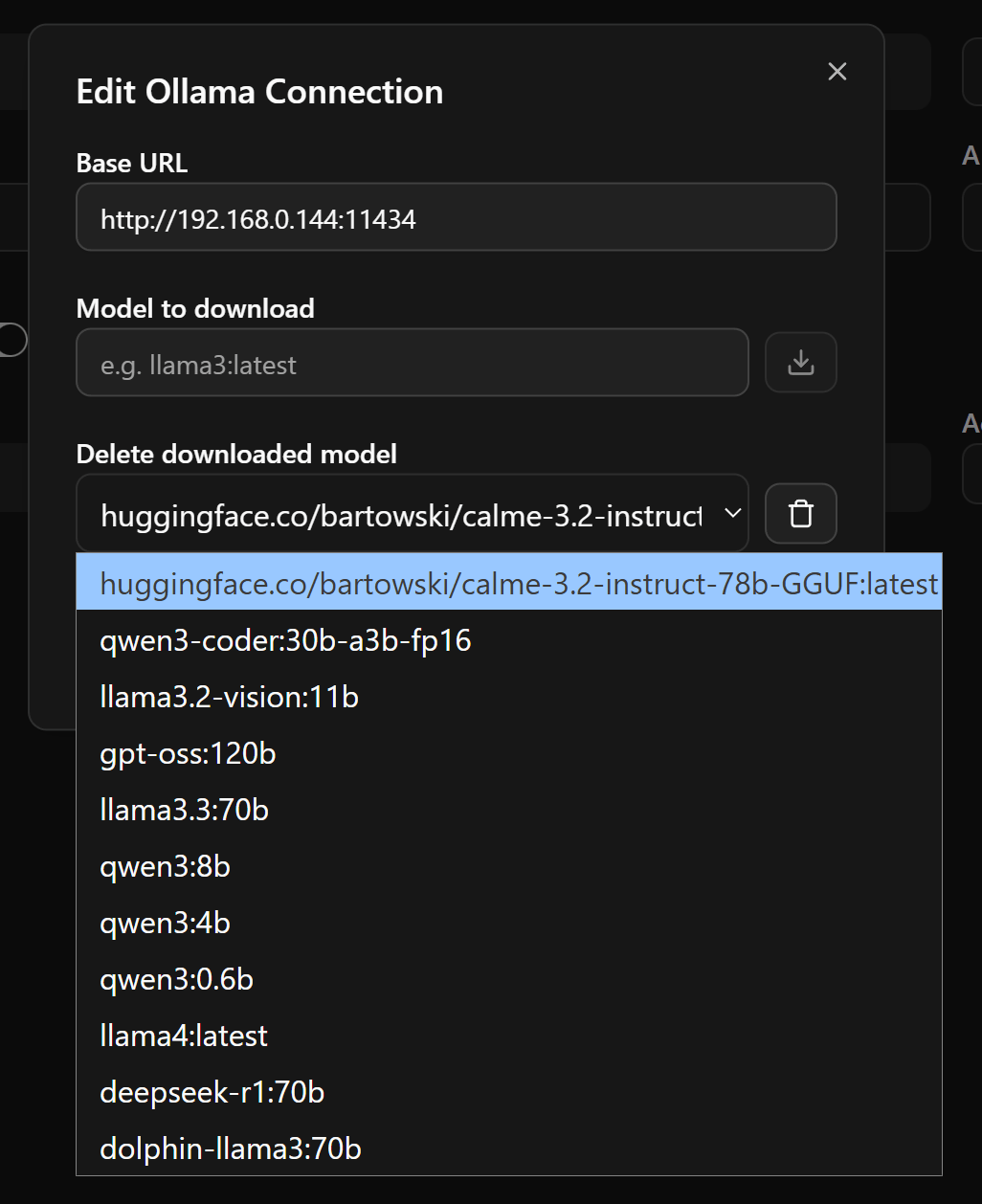

Download models

Browse available models and download them locally so they are ready to use.
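Downloads of large models can take a while, so progress reporting matters. As a rough illustration, the sketch below parses the newline-delimited JSON stream that Ollama's `POST /api/pull` endpoint returns. It assumes each event carries a `status` field and, while a layer is downloading, `total` and `completed` byte counts. Whether the app uses this endpoint is an assumption, and `pull_progress` is a hypothetical name.

```python
import json


def pull_progress(lines):
    """Yield (status, percent) per JSON event; percent is None when no byte counts are present."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        event = json.loads(raw)
        total = event.get("total")
        done = event.get("completed")
        percent = round(100 * done / total, 1) if total and done is not None else None
        yield event.get("status", ""), percent
```

Feeding it the lines of a pull response yields one tuple per event, which is enough to drive a simple progress bar.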

Delete models

Remove models you no longer need to free up disk space.
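For scripted cleanup, the same operation is available through Ollama's HTTP API. The sketch below builds a `DELETE /api/delete` request and estimates how much space a set of deletions might free, using the `size` field from the `/api/tags` model list. It assumes the `model` request field from current Ollama documentation (older versions used `name`), and both function names are hypothetical. Treat the size figure as an estimate, since models can share layers on disk.

```python
import json
import urllib.request


def build_delete_request(
    model: str, base_url: str = "http://localhost:11434"
) -> urllib.request.Request:
    """Build (but do not send) a DELETE request that removes one local model."""
    body = json.dumps({"model": model}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/delete",
        data=body,
        method="DELETE",
        headers={"Content-Type": "application/json"},
    )


def reclaimable_bytes(installed: list[dict], to_delete: set[str]) -> int:
    """Sum the reported sizes of the models selected for deletion."""
    return sum(m.get("size", 0) for m in installed if m.get("name") in to_delete)
```

Passing the request to `urllib.request.urlopen` sends it and removes the model.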

Hugging Face (GGUF)

Download GGUF models from Hugging Face (see the GGUF models catalog). To use one with Ollama, create a model from the GGUF file via a Modelfile. These models are large, so make sure you have enough disk space before downloading.
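The Modelfile step can be sketched as follows. A minimal Modelfile needs only a `FROM` line pointing at the downloaded GGUF file, and `ollama create <name> -f <Modelfile>` registers it. The helper names, the file path, and the model name are placeholders for illustration.

```python
def modelfile_text(gguf_path: str) -> str:
    """Return the contents of a minimal Modelfile that imports a local GGUF file."""
    return f"FROM {gguf_path}\n"


def create_command(model_name: str, modelfile_path: str = "Modelfile") -> list[str]:
    """Return the argv for `ollama create`, suitable for subprocess.run."""
    return ["ollama", "create", model_name, "-f", modelfile_path]


if __name__ == "__main__":
    # Hypothetical file and model names; replace them with your own.
    print(modelfile_text("./my-model.Q4_K_M.gguf"), end="")
    print(" ".join(create_command("my-model")))
```

After writing the text to a file named `Modelfile` and running the printed command, the model should appear alongside the others in `ollama list`.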